3 Deep learning

3.1 Deep neural network

3.1.1 Load data

# Load the Pima Indians Diabetes dataset from the mlbench package

library(mlbench)

data(PimaIndiansDiabetes)

# Copy the dataset under a shorter name

diabetes <- PimaIndiansDiabetes

data.set <- diabetes

# datatable(data.set[sample(nrow(data.set),

# replace = FALSE,

# size = 0.005 * nrow(data.set)), ])

summary(data.set)

## pregnant glucose pressure triceps

## Min. : 0.000 Min. : 0.0 Min. : 0.00 Min. : 0.00

## 1st Qu.: 1.000 1st Qu.: 99.0 1st Qu.: 62.00 1st Qu.: 0.00

## Median : 3.000 Median :117.0 Median : 72.00 Median :23.00

## Mean : 3.845 Mean :120.9 Mean : 69.11 Mean :20.54

## 3rd Qu.: 6.000 3rd Qu.:140.2 3rd Qu.: 80.00 3rd Qu.:32.00

## Max. :17.000 Max. :199.0 Max. :122.00 Max. :99.00

## insulin mass pedigree age diabetes

## Min. : 0.0 Min. : 0.00 Min. :0.0780 Min. :21.00 neg:500

## 1st Qu.: 0.0 1st Qu.:27.30 1st Qu.:0.2437 1st Qu.:24.00 pos:268

## Median : 30.5 Median :32.00 Median :0.3725 Median :29.00

## Mean : 79.8 Mean :31.99 Mean :0.4719 Mean :33.24

## 3rd Qu.:127.2 3rd Qu.:36.60 3rd Qu.:0.6262 3rd Qu.:41.00

## Max. :846.0 Max. :67.10 Max. :2.4200 Max. :81.00

3.1.2 Process data and variable

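# Convert the factor target (neg/pos) to its numeric level codes (1/2)

data.set$diabetes <- as.numeric(data.set$diabetes)

# Shift the codes to 0/1 so that 0 = neg and 1 = pos

data.set$diabetes <- data.set$diabetes - 1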
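The recoding above works because `as.numeric()` on a factor returns the internal level codes rather than the labels. The following is a minimal sketch on a toy factor (the vector `outcome` is illustrative, not taken from the dataset) that assumes the same level order, `neg` then `pos`, as shown in the summary output.

```r
# Toy factor with the same two levels as the diabetes column (illustrative data)
outcome <- factor(c("pos", "neg", "pos", "neg"), levels = c("neg", "pos"))

# as.numeric() returns the internal level codes: neg -> 1, pos -> 2
codes <- as.numeric(outcome)

# Subtracting 1 gives the 0/1 coding used for the target
labels01 <- codes - 1

print(codes)
print(labels01)
```

If the level order were different, the 0/1 meaning would flip, so it is worth checking `levels()` before relying on this shortcut.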

head(data.set$diabetes)

## [1] 1 0 1 0 1 0

head(data.set)

## pregnant glucose pressure triceps insulin mass pedigree age diabetes

## 1 6 148 72 35 0 33.6 0.627 50 1

## 2 1 85 66 29 0 26.6 0.351 31 0

## 3 8 183 64 0 0 23.3 0.672 32 1

## 4 1 89 66 23 94 28.1 0.167 21 0

## 5 0 137 40 35 168 43.1 2.288 33 1

## 6 5 116 74 0 0 25.6 0.201 30 0

str(data.set)

## 'data.frame': 768 obs. of 9 variables:

## $ pregnant: num 6 1 8 1 0 5 3 10 2 8 ...

## $ glucose : num 148 85 183 89 137 116 78 115 197 125 ...

## $ pressure: num 72 66 64 66 40 74 50 0 70 96 ...

## $ triceps : num 35 29 0 23 35 0 32 0 45 0 ...

## $ insulin : num 0 0 0 94 168 0 88 0 543 0 ...

## $ mass : num 33.6 26.6 23.3 28.1 43.1 25.6 31 35.3 30.5 0 ...

## $ pedigree: num 0.627 0.351 0.672 0.167 2.288 ...

## $ age : num 50 31 32 21 33 30 26 29 53 54 ...

## $ diabetes: num 1 0 1 0 1 0 1 0 1 1 ...

- Transform the data frame into a matrix

# Cast dataframe as a matrix

data.set <- as.matrix(data.set)

# Remove column names

dimnames(data.set) <- NULL

head(data.set)

## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9]

## [1,] 6 148 72 35 0 33.6 0.627 50 1

## [2,] 1 85 66 29 0 26.6 0.351 31 0

## [3,] 8 183 64 0 0 23.3 0.672 32 1

## [4,] 1 89 66 23 94 28.1 0.167 21 0

## [5,] 0 137 40 35 168 43.1 2.288 33 1

## [6,] 5 116 74 0 0 25.6 0.201 30 0

3.1.3 Split data into training and test datasets

- The split produces four objects: x_train, y_train, x_test, and y_test
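The split in this subsection labels each row independently as 1 (training) or 2 (test) with probabilities 0.8 and 0.2, so the resulting proportions are only approximately 80/20. A minimal sketch of that behaviour follows; the seed and the name `indx_demo` are chosen for illustration only and are not the ones used in the text.

```r
set.seed(1)  # arbitrary seed for this illustration only
n <- 768     # number of rows in the Pima data set

# Each row is independently labelled 1 (train) or 2 (test)
indx_demo <- sample(2, n, replace = TRUE, prob = c(0.8, 0.2))

# Group sizes vary from seed to seed; they only average to 80% / 20%
sizes <- table(indx_demo)
print(sizes)
print(round(prop.table(sizes), 3))
```

The 609 training rows and 159 test rows reported later in this subsection are one such draw (about 79.3% and 20.7%).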

# Split for train and test data

set.seed(100)

indx <- sample(2,

nrow(data.set),

replace = TRUE,

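prob = c(0.8, 0.2)) # Makes index with values 1 and 2

# Select only the feature variables (columns 1 to 8)

# Rows with index 1 form the training set, rows with index 2 the test set

x_train <- data.set[indx == 1, 1:8]

x_test <- data.set[indx == 2, 1:8]

# Feature scaling: standardize each column to zero mean and unit variance

# (each split is scaled with its own column means and standard deviations)

x_train <- scale(x_train)

x_test <- scale(x_test)

# Keep the original 0/1 test labels for the confusion matrix

y_test_actual <- data.set[indx == 2, 9]

- Transform the target into one-hot encoding format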

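# to_categorical() comes from the keras package; load it if it is not already attached

library(keras)

# Use the same row indices as for the features so that targets match the training and test sets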

y_train <- to_categorical(data.set[indx == 1, 9])

y_test <- to_categorical(data.set[indx == 2, 9])
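To make the one-hot format concrete, here is a minimal base R sketch of the same mapping for 0/1 labels. It is not how `to_categorical()` is implemented; it only reproduces the layout, with column 1 for class 0 and column 2 for class 1. The vector `labels` is illustrative.

```r
# Illustrative 0/1 labels (not the actual training targets)
labels <- c(1, 0, 1, 0, 0)

# Row i of the identity matrix is the one-hot vector for class i - 1
n_classes <- 2
one_hot <- diag(n_classes)[labels + 1, ]

print(one_hot)
```

A label of 1 becomes the row `0 1` and a label of 0 becomes `1 0`, which is the pattern visible in the first rows of `y_train`.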

head(y_train)

## [,1] [,2]

## [1,] 0 1

## [2,] 1 0

## [3,] 0 1

## [4,] 1 0

## [5,] 0 1

## [6,] 1 0

head(data.set[indx == 1, 9], 20)

## [1] 1 0 1 0 1 0 0 1 1 0 0 1 1 1 1 1 0 1 0 0

- Dimensions of the four split data sets

dim(x_train)

## [1] 609 8

dim(y_train)

## [1] 609 2

dim(x_test)

## [1] 159 8

dim(y_test)

## [1] 159 2

3.1.4 Creating neural network model

3.1.4.1 Construction of model

- the output layer contains 2 units, one for each class

# Creating the model

model <- keras_model_sequential()

model %>%

layer_dense(name = "DeepLayer1",

units = 10,

activation = "relu",

input_shape = c(8)) %>%

# input: 8 features

layer_dense(name = "DeepLayer2",

units = 10,

activation = "relu") %>%

layer_dense(name = "OutputLayer",

units = 2,

activation = "softmax")

# output: 2 categories using one-hot encoding
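The size of this network can be worked out by hand: a dense layer with `n` inputs and `m` units has `n * m` weights plus `m` biases. The sketch below applies that rule to the three layers defined above; `dense_params` is a helper introduced here for illustration, not a keras function.

```r
# Parameters of a dense layer: one weight per input-unit pair plus one bias per unit
dense_params <- function(n_inputs, n_units) n_inputs * n_units + n_units

layer_params <- c(
  DeepLayer1  = dense_params(8, 10),   # 8 features -> 10 units
  DeepLayer2  = dense_params(10, 10),  # 10 units   -> 10 units
  OutputLayer = dense_params(10, 2)    # 10 units   -> 2 classes
)

print(layer_params)
print(sum(layer_params))
```

These values (90, 110, 22, and 222 in total) agree with the `Param #` column printed by `summary(model)`.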

summary(model)

## Model: "sequential"

## ________________________________________________________________________________

## Layer (type) Output Shape Param #

## ================================================================================

## DeepLayer1 (Dense) (None, 10) 90

## DeepLayer2 (Dense) (None, 10) 110

## OutputLayer (Dense) (None, 2) 22

## ================================================================================

## Total params: 222

## Trainable params: 222

## Non-trainable params: 0

## ________________________________________________________________________________

3.1.4.2 Compiling the model

# Compiling the model

model %>% compile(loss = "categorical_crossentropy",

optimizer = "adam",

metrics = c("accuracy"))

3.1.4.3 Fitting the data and plot

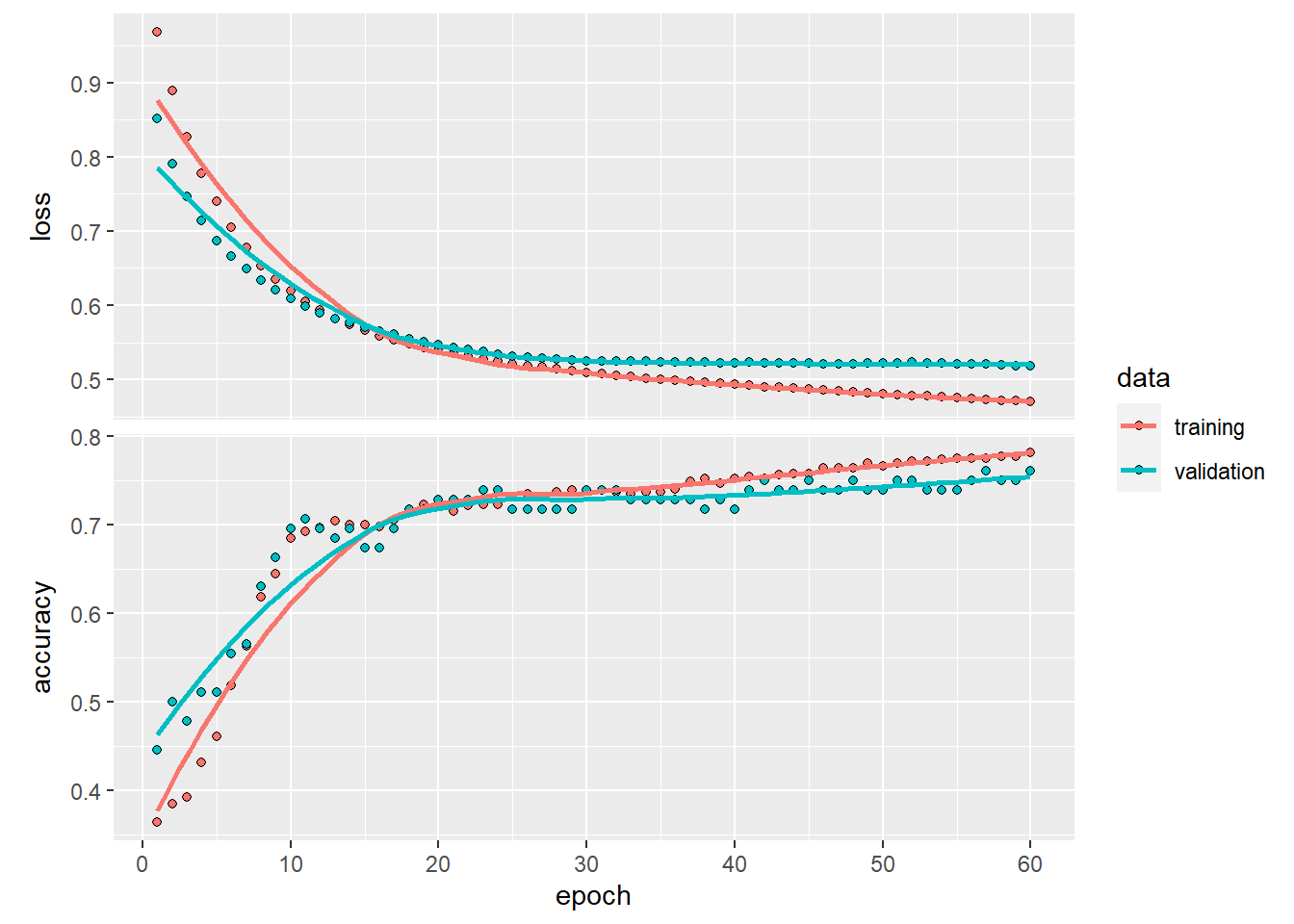

history <- model %>%

fit(x_train,

y_train,

# number of training epochs

epochs = 60,

# number of samples per gradient update

batch_size = 64,

# fraction of the training data held out for validation

validation_split = 0.15,

verbose = 2)

## Epoch 1/60

## 9/9 - 0s - loss: 0.9681 - accuracy: 0.3636 - val_loss: 0.8513 - val_accuracy: 0.4457 - 489ms/epoch - 54ms/step

## Epoch 2/60

## 9/9 - 0s - loss: 0.8899 - accuracy: 0.3849 - val_loss: 0.7903 - val_accuracy: 0.5000 - 52ms/epoch - 6ms/step

## Epoch 3/60

## 9/9 - 0s - loss: 0.8272 - accuracy: 0.3926 - val_loss: 0.7470 - val_accuracy: 0.4783 - 42ms/epoch - 5ms/step

## Epoch 4/60

## 9/9 - 0s - loss: 0.7779 - accuracy: 0.4313 - val_loss: 0.7140 - val_accuracy: 0.5109 - 34ms/epoch - 4ms/step

## Epoch 5/60

## 9/9 - 0s - loss: 0.7395 - accuracy: 0.4603 - val_loss: 0.6874 - val_accuracy: 0.5109 - 44ms/epoch - 5ms/step

## Epoch 6/60

## 9/9 - 0s - loss: 0.7049 - accuracy: 0.5184 - val_loss: 0.6661 - val_accuracy: 0.5543 - 58ms/epoch - 6ms/step

## Epoch 7/60

## 9/9 - 0s - loss: 0.6775 - accuracy: 0.5629 - val_loss: 0.6490 - val_accuracy: 0.5652 - 55ms/epoch - 6ms/step

## Epoch 8/60

## 9/9 - 0s - loss: 0.6535 - accuracy: 0.6190 - val_loss: 0.6335 - val_accuracy: 0.6304 - 40ms/epoch - 4ms/step

## Epoch 9/60

## 9/9 - 0s - loss: 0.6348 - accuracy: 0.6441 - val_loss: 0.6211 - val_accuracy: 0.6630 - 39ms/epoch - 4ms/step

## Epoch 10/60

## 9/9 - 0s - loss: 0.6195 - accuracy: 0.6847 - val_loss: 0.6093 - val_accuracy: 0.6957 - 39ms/epoch - 4ms/step

## Epoch 11/60

## 9/9 - 0s - loss: 0.6055 - accuracy: 0.6925 - val_loss: 0.5989 - val_accuracy: 0.7065 - 39ms/epoch - 4ms/step

## Epoch 12/60

## 9/9 - 0s - loss: 0.5937 - accuracy: 0.6963 - val_loss: 0.5898 - val_accuracy: 0.6957 - 45ms/epoch - 5ms/step

## Epoch 13/60

## 9/9 - 0s - loss: 0.5824 - accuracy: 0.7041 - val_loss: 0.5820 - val_accuracy: 0.6848 - 57ms/epoch - 6ms/step

## Epoch 14/60

## 9/9 - 0s - loss: 0.5737 - accuracy: 0.7002 - val_loss: 0.5761 - val_accuracy: 0.6957 - 42ms/epoch - 5ms/step

## Epoch 15/60

## 9/9 - 0s - loss: 0.5659 - accuracy: 0.7002 - val_loss: 0.5706 - val_accuracy: 0.6739 - 37ms/epoch - 4ms/step

## Epoch 16/60

## 9/9 - 0s - loss: 0.5590 - accuracy: 0.6983 - val_loss: 0.5650 - val_accuracy: 0.6739 - 36ms/epoch - 4ms/step

## Epoch 17/60

## 9/9 - 0s - loss: 0.5528 - accuracy: 0.7060 - val_loss: 0.5606 - val_accuracy: 0.6957 - 46ms/epoch - 5ms/step

## Epoch 18/60

## 9/9 - 0s - loss: 0.5478 - accuracy: 0.7176 - val_loss: 0.5548 - val_accuracy: 0.7174 - 45ms/epoch - 5ms/step

## Epoch 19/60

## 9/9 - 0s - loss: 0.5427 - accuracy: 0.7234 - val_loss: 0.5502 - val_accuracy: 0.7174 - 47ms/epoch - 5ms/step

## Epoch 20/60

## 9/9 - 0s - loss: 0.5385 - accuracy: 0.7234 - val_loss: 0.5465 - val_accuracy: 0.7283 - 47ms/epoch - 5ms/step

## Epoch 21/60

## 9/9 - 0s - loss: 0.5347 - accuracy: 0.7157 - val_loss: 0.5429 - val_accuracy: 0.7283 - 72ms/epoch - 8ms/step

## Epoch 22/60

## 9/9 - 0s - loss: 0.5308 - accuracy: 0.7215 - val_loss: 0.5398 - val_accuracy: 0.7283 - 50ms/epoch - 6ms/step

## Epoch 23/60

## 9/9 - 0s - loss: 0.5273 - accuracy: 0.7234 - val_loss: 0.5371 - val_accuracy: 0.7391 - 45ms/epoch - 5ms/step

## Epoch 24/60

## 9/9 - 0s - loss: 0.5241 - accuracy: 0.7234 - val_loss: 0.5341 - val_accuracy: 0.7391 - 58ms/epoch - 6ms/step

## Epoch 25/60

## 9/9 - 0s - loss: 0.5210 - accuracy: 0.7311 - val_loss: 0.5318 - val_accuracy: 0.7174 - 41ms/epoch - 5ms/step

## Epoch 26/60

## 9/9 - 0s - loss: 0.5187 - accuracy: 0.7350 - val_loss: 0.5303 - val_accuracy: 0.7174 - 42ms/epoch - 5ms/step

## Epoch 27/60

## 9/9 - 0s - loss: 0.5165 - accuracy: 0.7311 - val_loss: 0.5288 - val_accuracy: 0.7174 - 45ms/epoch - 5ms/step

## Epoch 28/60

## 9/9 - 0s - loss: 0.5142 - accuracy: 0.7369 - val_loss: 0.5273 - val_accuracy: 0.7174 - 65ms/epoch - 7ms/step

## Epoch 29/60

## 9/9 - 0s - loss: 0.5117 - accuracy: 0.7389 - val_loss: 0.5259 - val_accuracy: 0.7174 - 50ms/epoch - 6ms/step

## Epoch 30/60

## 9/9 - 0s - loss: 0.5097 - accuracy: 0.7389 - val_loss: 0.5248 - val_accuracy: 0.7391 - 47ms/epoch - 5ms/step

## Epoch 31/60

## 9/9 - 0s - loss: 0.5081 - accuracy: 0.7389 - val_loss: 0.5245 - val_accuracy: 0.7391 - 44ms/epoch - 5ms/step

## Epoch 32/60

## 9/9 - 0s - loss: 0.5059 - accuracy: 0.7369 - val_loss: 0.5243 - val_accuracy: 0.7391 - 48ms/epoch - 5ms/step

## Epoch 33/60

## 9/9 - 0s - loss: 0.5038 - accuracy: 0.7350 - val_loss: 0.5242 - val_accuracy: 0.7283 - 42ms/epoch - 5ms/step

## Epoch 34/60

## 9/9 - 0s - loss: 0.5018 - accuracy: 0.7369 - val_loss: 0.5242 - val_accuracy: 0.7283 - 61ms/epoch - 7ms/step

## Epoch 35/60

## 9/9 - 0s - loss: 0.5003 - accuracy: 0.7369 - val_loss: 0.5238 - val_accuracy: 0.7283 - 54ms/epoch - 6ms/step

## Epoch 36/60

## 9/9 - 0s - loss: 0.4988 - accuracy: 0.7408 - val_loss: 0.5237 - val_accuracy: 0.7283 - 48ms/epoch - 5ms/step

## Epoch 37/60

## 9/9 - 0s - loss: 0.4977 - accuracy: 0.7485 - val_loss: 0.5234 - val_accuracy: 0.7283 - 40ms/epoch - 4ms/step

## Epoch 38/60

## 9/9 - 0s - loss: 0.4961 - accuracy: 0.7524 - val_loss: 0.5232 - val_accuracy: 0.7174 - 42ms/epoch - 5ms/step

## Epoch 39/60

## 9/9 - 0s - loss: 0.4948 - accuracy: 0.7466 - val_loss: 0.5227 - val_accuracy: 0.7283 - 39ms/epoch - 4ms/step

## Epoch 40/60

## 9/9 - 0s - loss: 0.4934 - accuracy: 0.7524 - val_loss: 0.5227 - val_accuracy: 0.7174 - 39ms/epoch - 4ms/step

## Epoch 41/60

## 9/9 - 0s - loss: 0.4919 - accuracy: 0.7544 - val_loss: 0.5231 - val_accuracy: 0.7391 - 45ms/epoch - 5ms/step

## Epoch 42/60

## 9/9 - 0s - loss: 0.4903 - accuracy: 0.7524 - val_loss: 0.5228 - val_accuracy: 0.7500 - 40ms/epoch - 4ms/step

## Epoch 43/60

## 9/9 - 0s - loss: 0.4892 - accuracy: 0.7563 - val_loss: 0.5223 - val_accuracy: 0.7391 - 33ms/epoch - 4ms/step

## Epoch 44/60

## 9/9 - 0s - loss: 0.4882 - accuracy: 0.7582 - val_loss: 0.5221 - val_accuracy: 0.7391 - 37ms/epoch - 4ms/step

## Epoch 45/60

## 9/9 - 0s - loss: 0.4876 - accuracy: 0.7582 - val_loss: 0.5220 - val_accuracy: 0.7500 - 44ms/epoch - 5ms/step

## Epoch 46/60

## 9/9 - 0s - loss: 0.4862 - accuracy: 0.7640 - val_loss: 0.5213 - val_accuracy: 0.7391 - 43ms/epoch - 5ms/step

## Epoch 47/60

## 9/9 - 0s - loss: 0.4843 - accuracy: 0.7640 - val_loss: 0.5209 - val_accuracy: 0.7391 - 33ms/epoch - 4ms/step

## Epoch 48/60

## 9/9 - 0s - loss: 0.4830 - accuracy: 0.7640 - val_loss: 0.5212 - val_accuracy: 0.7500 - 35ms/epoch - 4ms/step

## Epoch 49/60

## 9/9 - 0s - loss: 0.4820 - accuracy: 0.7698 - val_loss: 0.5218 - val_accuracy: 0.7391 - 35ms/epoch - 4ms/step

## Epoch 50/60

## 9/9 - 0s - loss: 0.4806 - accuracy: 0.7660 - val_loss: 0.5224 - val_accuracy: 0.7391 - 37ms/epoch - 4ms/step

## Epoch 51/60

## 9/9 - 0s - loss: 0.4796 - accuracy: 0.7698 - val_loss: 0.5224 - val_accuracy: 0.7500 - 39ms/epoch - 4ms/step

## Epoch 52/60

## 9/9 - 0s - loss: 0.4780 - accuracy: 0.7718 - val_loss: 0.5229 - val_accuracy: 0.7500 - 35ms/epoch - 4ms/step

## Epoch 53/60

## 9/9 - 0s - loss: 0.4775 - accuracy: 0.7718 - val_loss: 0.5224 - val_accuracy: 0.7391 - 36ms/epoch - 4ms/step

## Epoch 54/60

## 9/9 - 0s - loss: 0.4762 - accuracy: 0.7737 - val_loss: 0.5221 - val_accuracy: 0.7391 - 37ms/epoch - 4ms/step

## Epoch 55/60

## 9/9 - 0s - loss: 0.4755 - accuracy: 0.7756 - val_loss: 0.5212 - val_accuracy: 0.7391 - 36ms/epoch - 4ms/step

## Epoch 56/60

## 9/9 - 0s - loss: 0.4741 - accuracy: 0.7756 - val_loss: 0.5209 - val_accuracy: 0.7500 - 32ms/epoch - 4ms/step

## Epoch 57/60

## 9/9 - 0s - loss: 0.4731 - accuracy: 0.7756 - val_loss: 0.5209 - val_accuracy: 0.7609 - 34ms/epoch - 4ms/step

## Epoch 58/60

## 9/9 - 0s - loss: 0.4720 - accuracy: 0.7776 - val_loss: 0.5192 - val_accuracy: 0.7500 - 39ms/epoch - 4ms/step

## Epoch 59/60

## 9/9 - 0s - loss: 0.4711 - accuracy: 0.7776 - val_loss: 0.5188 - val_accuracy: 0.7500 - 36ms/epoch - 4ms/step

## Epoch 60/60

## 9/9 - 0s - loss: 0.4699 - accuracy: 0.7814 - val_loss: 0.5187 - val_accuracy: 0.7609 - 36ms/epoch - 4ms/step

plot(history)
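The `9/9` at the start of each log line is the number of batches per epoch, and it can be reproduced from the settings passed to `fit()`. The sketch below is a back-of-the-envelope check, assuming that the number of rows kept for fitting is the floor of `n * (1 - validation_split)`; that rounding rule is an assumption about Keras internals, not something stated in the text.

```r
n_train    <- 609   # rows in x_train, from dim(x_train)
val_split  <- 0.15
batch_size <- 64

# Assumption: rows kept for fitting = floor(n * (1 - validation_split))
n_fit <- floor(n_train * (1 - val_split))
n_val <- n_train - n_fit

# Gradient updates (steps) per epoch
steps_per_epoch <- ceiling(n_fit / batch_size)

print(c(n_fit = n_fit, n_val = n_val, steps = steps_per_epoch))
```

Under this assumption 517 rows are used for fitting and 92 for validation, which gives 9 steps per epoch. It is also consistent with the logged validation accuracies, which look like multiples of 1/92 (for example, 0.4457 is about 41/92).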

3.1.5 Evaluation

3.1.5.1 Output loss and accuracy

Use the x_test and y_test data sets to evaluate the fitted model directly.

model %>%

evaluate(x_test,

y_test)

##

## 1/5 [=====>........................] - ETA: 0s - loss: 0.4753 - accuracy: 0.8125

## 5/5 [==============================] - 0s 1ms/step - loss: 0.4506 - accuracy: 0.7925

## loss accuracy

## 0.4506253 0.7924528

3.1.5.2 Output the predicted classes and confusion matrix

pred <- model %>%

predict(x_test) %>% k_argmax() %>% k_get_value()
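The pipeline above turns each row of class probabilities into the index of its largest entry. A minimal base R sketch of the same idea is shown below; it uses `max.col()` instead of the keras backend functions, and the three rows are rounded from the `head(prob)` output in 3.1.5.3.

```r
# Class probabilities for three samples (rounded from head(prob) in 3.1.5.3)
prob_demo <- matrix(c(0.968, 0.032,
                      0.228, 0.772,
                      0.964, 0.036),
                    ncol = 2, byrow = TRUE)

# max.col() gives the 1-based column of the row maximum; subtract 1 for 0/1 labels
pred_demo <- max.col(prob_demo, ties.method = "first") - 1

print(pred_demo)
```

The result `0 1 0` matches the first three predicted classes printed by `head(pred)`.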

head(pred)

## [1] 0 1 0 1 0 0

table(Predicted = pred,

Actual = y_test_actual)

## Actual

## Predicted 0 1

## 0 92 21

## 1 12 34

3.1.5.3 Output the predicted values

prob <- model %>%

predict(x_test) %>% k_get_value()

head(prob)

## [,1] [,2]

## [1,] 0.9680360 0.03196405

## [2,] 0.2276911 0.77230895

## [3,] 0.9635938 0.03640625

## [4,] 0.3521949 0.64780515

## [5,] 0.9190423 0.08095762

## [6,] 0.7750926 0.22490738

3.1.5.4 Comparison between prob, pred, and ytest

comparison <- cbind(prob ,

pred ,

y_test_actual )
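Beyond the row-by-row comparison, the agreement between `pred` and `y_test_actual` can be summarized with a few standard metrics. The sketch below recomputes them from the confusion-matrix counts printed in 3.1.5.2; treating class 1 as the positive class is an assumption made here for illustration.

```r
# Counts copied from the confusion matrix in 3.1.5.2 (rows = predicted, columns = actual)
cm <- matrix(c(92, 12, 21, 34), nrow = 2,
             dimnames = list(Predicted = c("0", "1"), Actual = c("0", "1")))

accuracy    <- sum(diag(cm)) / sum(cm)        # share of correct predictions
sensitivity <- cm["1", "1"] / sum(cm[, "1"])  # true positives / actual positives
specificity <- cm["0", "0"] / sum(cm[, "0"])  # true negatives / actual negatives

print(round(c(accuracy = accuracy,
              sensitivity = sensitivity,
              specificity = specificity), 4))
```

The accuracy computed this way, 126/159, equals the value reported by `evaluate()` in 3.1.5.1.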

head(comparison)

## pred y_test_actual

## [1,] 0.9680360 0.03196405 0 1

## [2,] 0.2276911 0.77230895 1 1

## [3,] 0.9635938 0.03640625 0 0

## [4,] 0.3521949 0.64780515 1 1

## [5,] 0.9190423 0.08095762 0 0

## [6,] 0.7750926 0.22490738 0 0

3.2 Deep neural networks for regression

3.2.1 Loading packages and data sets

library(readr)

library(keras)

library(plotly)

data("Boston", package = "MASS")

data.set <- Boston

dim(data.set)

## [1] 506 14

3.2.2 Convert dataframe to matrix without dimnames

library(DT)

# Cast dataframe as a matrix

data.set <- as.matrix(data.set)

# Remove column names

dimnames(data.set) = NULL

head(data.set)

## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11] [,12]

## [1,] 0.00632 18 2.31 0 0.538 6.575 65.2 4.0900 1 296 15.3 396.90

## [2,] 0.02731 0 7.07 0 0.469 6.421 78.9 4.9671 2 242 17.8 396.90

## [3,] 0.02729 0 7.07 0 0.469 7.185 61.1 4.9671 2 242 17.8 392.83

## [4,] 0.03237 0 2.18 0 0.458 6.998 45.8 6.0622 3 222 18.7 394.63

## [5,] 0.06905 0 2.18 0 0.458 7.147 54.2 6.0622 3 222 18.7 396.90

## [6,] 0.02985 0 2.18 0 0.458 6.430 58.7 6.0622 3 222 18.7 394.12

## [,13] [,14]

## [1,] 4.98 24.0

## [2,] 9.14 21.6

## [3,] 4.03 34.7

## [4,] 2.94 33.4

## [5,] 5.33 36.2

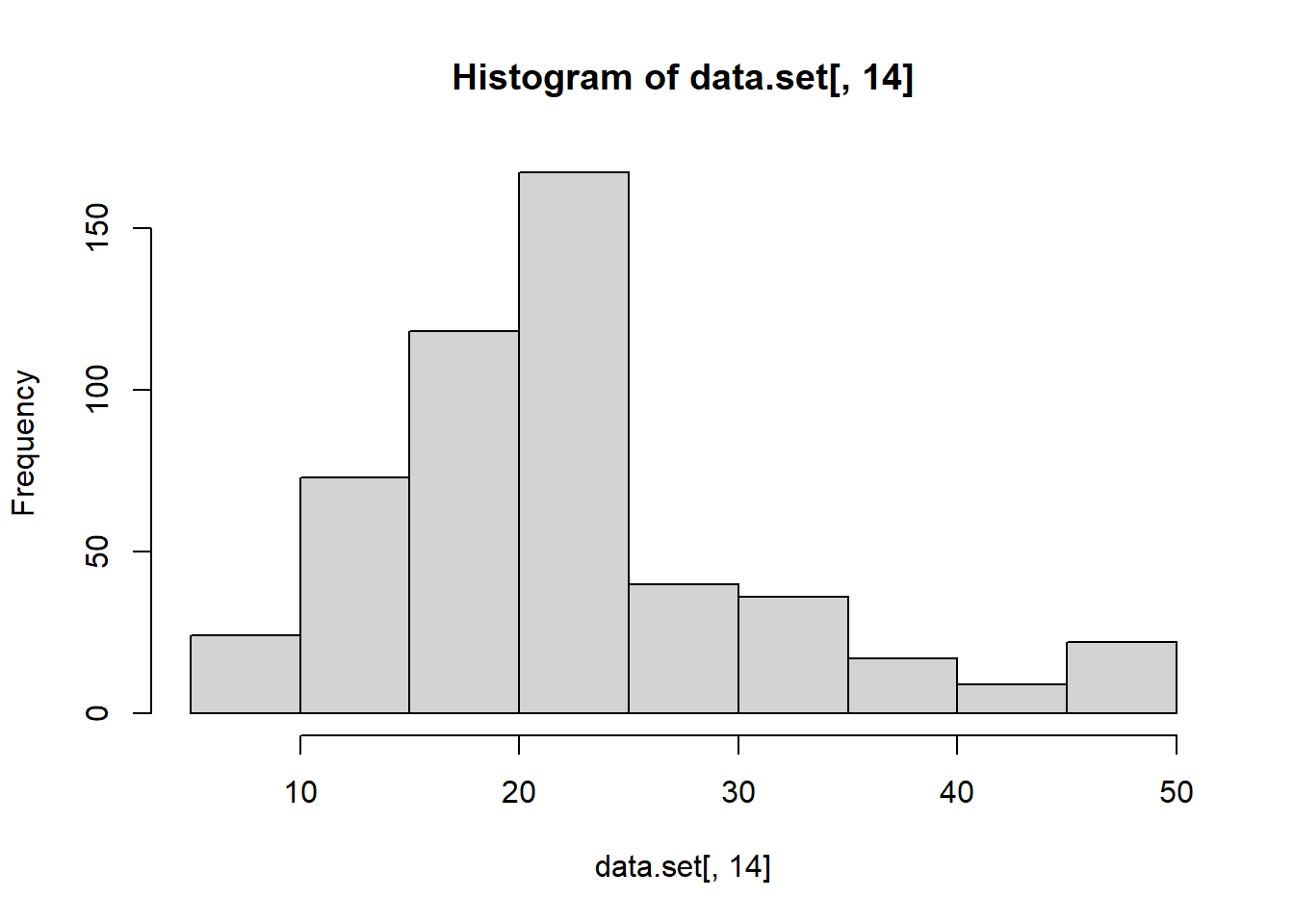

## [6,] 5.21 28.7summary(data.set[, 14])## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 5.00 17.02 21.20 22.53 25.00 50.00 hist( data.set[, 14])

(#fig:target variable histogram)Fig 1 Histogram of the target variable

3.2.3 Splitting training and test data

# Split for train and test data

set.seed(123)

indx <- sample(2,

nrow(data.set),

replace = TRUE,

prob = c(0.75, 0.25)) # Makes index with values 1 and 2

x_train <- data.set[indx == 1, 1:13]

x_test <- data.set[indx == 2, 1:13]

y_train <- data.set[indx == 1, 14]

y_test <- data.set[indx == 2, 14]

3.2.5 Creating the model

model <- keras_model_sequential() %>%

layer_dense(units = 25,

activation = "relu",

input_shape = c(13)) %>%

layer_dropout(0.2) %>%

layer_dense(units = 25,

activation = "relu") %>%

layer_dropout(0.2) %>%

layer_dense(units = 25,

activation = "relu") %>%

layer_dropout(0.2) %>%

layer_dense(units = 1)

model %>% summary()

## Model: "sequential_1"

## ________________________________________________________________________________

## Layer (type) Output Shape Param #

## ================================================================================

## dense_3 (Dense) (None, 25) 350

## dropout_2 (Dropout) (None, 25) 0

## dense_2 (Dense) (None, 25) 650

## dropout_1 (Dropout) (None, 25) 0

## dense_1 (Dense) (None, 25) 650

## dropout (Dropout) (None, 25) 0

## dense (Dense) (None, 1) 26

## ================================================================================

## Total params: 1,676

## Trainable params: 1,676

## Non-trainable params: 0

## ________________________________________________________________________________

model %>% get_config()

## {'name': 'sequential_1', 'layers': [{'class_name': 'InputLayer', 'config': {'batch_input_shape': (None, 13), 'dtype': 'float32', 'sparse': False, 'ragged': False, 'name': 'dense_3_input'}}, {'class_name': 'Dense', 'config': {'name': 'dense_3', 'trainable': True, 'batch_input_shape': (None, 13), 'dtype': 'float32', 'units': 25, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'class_name': 'GlorotUniform', 'config': {'seed': None}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Dropout', 'config': {'name': 'dropout_2', 'trainable': True, 'dtype': 'float32', 'rate': 0.2, 'noise_shape': None, 'seed': None}}, {'class_name': 'Dense', 'config': {'name': 'dense_2', 'trainable': True, 'dtype': 'float32', 'units': 25, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'class_name': 'GlorotUniform', 'config': {'seed': None}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Dropout', 'config': {'name': 'dropout_1', 'trainable': True, 'dtype': 'float32', 'rate': 0.2, 'noise_shape': None, 'seed': None}}, {'class_name': 'Dense', 'config': {'name': 'dense_1', 'trainable': True, 'dtype': 'float32', 'units': 25, 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'class_name': 'GlorotUniform', 'config': {'seed': None}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Dropout', 'config': {'name': 'dropout', 'trainable': True, 'dtype': 'float32', 'rate': 0.2, 'noise_shape': None, 'seed': None}}, {'class_name': 'Dense', 'config': {'name': 'dense', 'trainable': True, 'dtype': 'float32', 'units': 1, 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'class_name': 'GlorotUniform', 'config': {'seed': None}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}]}

3.2.6 Compiling the model

model %>% compile(loss = "mse",

optimizer = optimizer_rmsprop(),

metrics = c("mean_absolute_error"))

3.2.7 Fitting the model

history <- model %>%

fit(x_train,

y_train,

epochs = 100,

batch_size = 64,

validation_split = 0.1,

# stop when the validation MAE has not improved for 5 consecutive epochs

callbacks = list(callback_early_stopping(monitor = "val_mean_absolute_error",

patience = 5)),

verbose = 2)

## Epoch 1/100

## 6/6 - 1s - loss: 633.1686 - mean_absolute_error: 23.1448 - val_loss: 362.1218 - val_mean_absolute_error: 18.6922 - 543ms/epoch - 90ms/step

## Epoch 2/100

## 6/6 - 0s - loss: 619.5684 - mean_absolute_error: 22.8226 - val_loss: 353.5802 - val_mean_absolute_error: 18.4510 - 29ms/epoch - 5ms/step

## Epoch 3/100

## 6/6 - 0s - loss: 606.3226 - mean_absolute_error: 22.4991 - val_loss: 343.4781 - val_mean_absolute_error: 18.1589 - 28ms/epoch - 5ms/step

## Epoch 4/100

## 6/6 - 0s - loss: 591.8018 - mean_absolute_error: 22.1299 - val_loss: 331.8621 - val_mean_absolute_error: 17.8183 - 29ms/epoch - 5ms/step

## Epoch 5/100

## 6/6 - 0s - loss: 575.3328 - mean_absolute_error: 21.7065 - val_loss: 318.4845 - val_mean_absolute_error: 17.4185 - 28ms/epoch - 5ms/step

## Epoch 6/100

## 6/6 - 0s - loss: 556.1640 - mean_absolute_error: 21.2277 - val_loss: 303.3835 - val_mean_absolute_error: 16.9556 - 29ms/epoch - 5ms/step

## Epoch 7/100

## 6/6 - 0s - loss: 527.6621 - mean_absolute_error: 20.5617 - val_loss: 285.5919 - val_mean_absolute_error: 16.3955 - 30ms/epoch - 5ms/step

## Epoch 8/100

## 6/6 - 0s - loss: 506.4395 - mean_absolute_error: 20.0282 - val_loss: 267.2332 - val_mean_absolute_error: 15.7963 - 30ms/epoch - 5ms/step

## Epoch 9/100

## 6/6 - 0s - loss: 469.5865 - mean_absolute_error: 19.0669 - val_loss: 247.6484 - val_mean_absolute_error: 15.1307 - 33ms/epoch - 5ms/step

## Epoch 10/100

## 6/6 - 0s - loss: 429.0699 - mean_absolute_error: 18.1442 - val_loss: 227.3992 - val_mean_absolute_error: 14.4101 - 35ms/epoch - 6ms/step

## Epoch 11/100

## 6/6 - 0s - loss: 388.9806 - mean_absolute_error: 17.1307 - val_loss: 205.8531 - val_mean_absolute_error: 13.6039 - 29ms/epoch - 5ms/step

## Epoch 12/100

## 6/6 - 0s - loss: 345.7701 - mean_absolute_error: 16.0031 - val_loss: 185.8886 - val_mean_absolute_error: 12.8119 - 31ms/epoch - 5ms/step

## Epoch 13/100

## 6/6 - 0s - loss: 310.5396 - mean_absolute_error: 14.8583 - val_loss: 166.5514 - val_mean_absolute_error: 12.0074 - 37ms/epoch - 6ms/step

## Epoch 14/100

## 6/6 - 0s - loss: 265.3821 - mean_absolute_error: 13.4096 - val_loss: 146.0776 - val_mean_absolute_error: 11.0859 - 46ms/epoch - 8ms/step

## Epoch 15/100

## 6/6 - 0s - loss: 220.2564 - mean_absolute_error: 12.1002 - val_loss: 126.8281 - val_mean_absolute_error: 10.1527 - 37ms/epoch - 6ms/step

## Epoch 16/100

## 6/6 - 0s - loss: 193.3011 - mean_absolute_error: 11.1827 - val_loss: 110.3338 - val_mean_absolute_error: 9.3306 - 37ms/epoch - 6ms/step

## Epoch 17/100

## 6/6 - 0s - loss: 152.6866 - mean_absolute_error: 9.6193 - val_loss: 94.0219 - val_mean_absolute_error: 8.5289 - 31ms/epoch - 5ms/step

## Epoch 18/100

## 6/6 - 0s - loss: 143.4529 - mean_absolute_error: 9.3241 - val_loss: 82.9254 - val_mean_absolute_error: 7.9897 - 29ms/epoch - 5ms/step

## Epoch 19/100

## 6/6 - 0s - loss: 108.7542 - mean_absolute_error: 7.8017 - val_loss: 75.9578 - val_mean_absolute_error: 7.6571 - 28ms/epoch - 5ms/step

## Epoch 20/100

## 6/6 - 0s - loss: 112.6149 - mean_absolute_error: 7.8218 - val_loss: 67.7028 - val_mean_absolute_error: 7.2327 - 29ms/epoch - 5ms/step

## Epoch 21/100

## 6/6 - 0s - loss: 94.2892 - mean_absolute_error: 7.1888 - val_loss: 65.4072 - val_mean_absolute_error: 7.0785 - 28ms/epoch - 5ms/step

## Epoch 22/100

## 6/6 - 0s - loss: 95.8447 - mean_absolute_error: 7.0795 - val_loss: 60.8710 - val_mean_absolute_error: 6.7702 - 30ms/epoch - 5ms/step

## Epoch 23/100

## 6/6 - 0s - loss: 81.0740 - mean_absolute_error: 6.7073 - val_loss: 58.3951 - val_mean_absolute_error: 6.5841 - 30ms/epoch - 5ms/step

## Epoch 24/100

## 6/6 - 0s - loss: 70.8558 - mean_absolute_error: 6.4321 - val_loss: 56.3403 - val_mean_absolute_error: 6.4243 - 31ms/epoch - 5ms/step

## Epoch 25/100

## 6/6 - 0s - loss: 86.6767 - mean_absolute_error: 6.9435 - val_loss: 52.0283 - val_mean_absolute_error: 6.0943 - 29ms/epoch - 5ms/step

## Epoch 26/100

## 6/6 - 0s - loss: 75.3748 - mean_absolute_error: 6.4401 - val_loss: 51.1953 - val_mean_absolute_error: 6.0465 - 29ms/epoch - 5ms/step

## Epoch 27/100

## 6/6 - 0s - loss: 67.8355 - mean_absolute_error: 6.0516 - val_loss: 54.1734 - val_mean_absolute_error: 6.2449 - 29ms/epoch - 5ms/step

## Epoch 28/100

## 6/6 - 0s - loss: 77.4505 - mean_absolute_error: 6.6767 - val_loss: 54.5568 - val_mean_absolute_error: 6.2994 - 28ms/epoch - 5ms/step

## Epoch 29/100

## 6/6 - 0s - loss: 61.7278 - mean_absolute_error: 5.7904 - val_loss: 51.2587 - val_mean_absolute_error: 6.1204 - 28ms/epoch - 5ms/step

## Epoch 30/100

## 6/6 - 0s - loss: 71.2910 - mean_absolute_error: 6.3351 - val_loss: 52.1180 - val_mean_absolute_error: 6.2051 - 29ms/epoch - 5ms/step

## Epoch 31/100

## 6/6 - 0s - loss: 68.1252 - mean_absolute_error: 6.1091 - val_loss: 47.0658 - val_mean_absolute_error: 5.8783 - 29ms/epoch - 5ms/step

## Epoch 32/100

## 6/6 - 0s - loss: 58.1343 - mean_absolute_error: 5.8253 - val_loss: 46.6667 - val_mean_absolute_error: 5.8744 - 30ms/epoch - 5ms/step

## Epoch 33/100

## 6/6 - 0s - loss: 57.8563 - mean_absolute_error: 5.6244 - val_loss: 44.8589 - val_mean_absolute_error: 5.7674 - 30ms/epoch - 5ms/step

## Epoch 34/100

## 6/6 - 0s - loss: 63.9937 - mean_absolute_error: 5.9953 - val_loss: 43.0551 - val_mean_absolute_error: 5.6553 - 31ms/epoch - 5ms/step

## Epoch 35/100

## 6/6 - 0s - loss: 63.4491 - mean_absolute_error: 5.9678 - val_loss: 41.5887 - val_mean_absolute_error: 5.5499 - 28ms/epoch - 5ms/step

## Epoch 36/100

## 6/6 - 0s - loss: 64.7595 - mean_absolute_error: 5.8879 - val_loss: 38.9553 - val_mean_absolute_error: 5.3575 - 32ms/epoch - 5ms/step

## Epoch 37/100

## 6/6 - 0s - loss: 63.6228 - mean_absolute_error: 5.9375 - val_loss: 39.8530 - val_mean_absolute_error: 5.4718 - 29ms/epoch - 5ms/step

## Epoch 38/100

## 6/6 - 0s - loss: 62.6301 - mean_absolute_error: 5.7237 - val_loss: 37.3205 - val_mean_absolute_error: 5.2747 - 29ms/epoch - 5ms/step

## Epoch 39/100

## 6/6 - 0s - loss: 60.3906 - mean_absolute_error: 5.7724 - val_loss: 35.2879 - val_mean_absolute_error: 5.1099 - 29ms/epoch - 5ms/step

## Epoch 40/100

## 6/6 - 0s - loss: 52.7585 - mean_absolute_error: 5.4360 - val_loss: 34.7210 - val_mean_absolute_error: 5.0942 - 30ms/epoch - 5ms/step

## Epoch 41/100

## 6/6 - 0s - loss: 51.7187 - mean_absolute_error: 5.2884 - val_loss: 30.2621 - val_mean_absolute_error: 4.7098 - 29ms/epoch - 5ms/step

## Epoch 42/100

## 6/6 - 0s - loss: 53.5071 - mean_absolute_error: 5.4761 - val_loss: 28.5758 - val_mean_absolute_error: 4.5740 - 30ms/epoch - 5ms/step

## Epoch 43/100

## 6/6 - 0s - loss: 52.8232 - mean_absolute_error: 5.5496 - val_loss: 28.6857 - val_mean_absolute_error: 4.5879 - 34ms/epoch - 6ms/step

## Epoch 44/100

## 6/6 - 0s - loss: 49.7628 - mean_absolute_error: 5.2005 - val_loss: 28.9877 - val_mean_absolute_error: 4.6011 - 30ms/epoch - 5ms/step

## Epoch 45/100

## 6/6 - 0s - loss: 51.1762 - mean_absolute_error: 5.3729 - val_loss: 27.6649 - val_mean_absolute_error: 4.5036 - 30ms/epoch - 5ms/step

## Epoch 46/100

## 6/6 - 0s - loss: 56.5504 - mean_absolute_error: 5.7260 - val_loss: 25.6642 - val_mean_absolute_error: 4.3385 - 27ms/epoch - 5ms/step

## Epoch 47/100

## 6/6 - 0s - loss: 51.8396 - mean_absolute_error: 5.1717 - val_loss: 26.9569 - val_mean_absolute_error: 4.4545 - 28ms/epoch - 5ms/step

## Epoch 48/100

## 6/6 - 0s - loss: 44.6086 - mean_absolute_error: 5.2793 - val_loss: 24.8331 - val_mean_absolute_error: 4.2759 - 30ms/epoch - 5ms/step

## Epoch 49/100

## 6/6 - 0s - loss: 44.1259 - mean_absolute_error: 4.8670 - val_loss: 23.5274 - val_mean_absolute_error: 4.1741 - 30ms/epoch - 5ms/step

## Epoch 50/100

## 6/6 - 0s - loss: 50.5595 - mean_absolute_error: 5.1824 - val_loss: 21.8385 - val_mean_absolute_error: 4.0405 - 33ms/epoch - 5ms/step

## Epoch 51/100

## 6/6 - 0s - loss: 49.8013 - mean_absolute_error: 5.3196 - val_loss: 23.6437 - val_mean_absolute_error: 4.1954 - 29ms/epoch - 5ms/step

## Epoch 52/100

## 6/6 - 0s - loss: 47.3392 - mean_absolute_error: 5.1700 - val_loss: 21.5459 - val_mean_absolute_error: 4.0008 - 29ms/epoch - 5ms/step

## Epoch 53/100

## 6/6 - 0s - loss: 49.1827 - mean_absolute_error: 5.3445 - val_loss: 22.0266 - val_mean_absolute_error: 4.0278 - 29ms/epoch - 5ms/step

## Epoch 54/100

## 6/6 - 0s - loss: 49.9451 - mean_absolute_error: 5.2521 - val_loss: 23.2426 - val_mean_absolute_error: 4.1344 - 29ms/epoch - 5ms/step

## Epoch 55/100

## 6/6 - 0s - loss: 48.2228 - mean_absolute_error: 5.2593 - val_loss: 22.7446 - val_mean_absolute_error: 4.0828 - 29ms/epoch - 5ms/step

## Epoch 56/100

## 6/6 - 0s - loss: 47.5721 - mean_absolute_error: 4.9321 - val_loss: 20.9730 - val_mean_absolute_error: 3.9223 - 29ms/epoch - 5ms/step

## Epoch 57/100

## 6/6 - 0s - loss: 49.8960 - mean_absolute_error: 4.9387 - val_loss: 19.2941 - val_mean_absolute_error: 3.7518 - 28ms/epoch - 5ms/step

## Epoch 58/100

## 6/6 - 0s - loss: 43.6015 - mean_absolute_error: 4.9093 - val_loss: 20.2174 - val_mean_absolute_error: 3.8490 - 28ms/epoch - 5ms/step

## Epoch 59/100

## 6/6 - 0s - loss: 49.6302 - mean_absolute_error: 5.1938 - val_loss: 19.3697 - val_mean_absolute_error: 3.7527 - 30ms/epoch - 5ms/step

## Epoch 60/100

## 6/6 - 0s - loss: 44.7460 - mean_absolute_error: 5.0207 - val_loss: 17.5183 - val_mean_absolute_error: 3.5735 - 29ms/epoch - 5ms/step

## Epoch 61/100

## 6/6 - 0s - loss: 45.6789 - mean_absolute_error: 5.0399 - val_loss: 17.6852 - val_mean_absolute_error: 3.5582 - 29ms/epoch - 5ms/step

## Epoch 62/100

## 6/6 - 0s - loss: 41.7331 - mean_absolute_error: 4.8633 - val_loss: 17.3254 - val_mean_absolute_error: 3.5304 - 31ms/epoch - 5ms/step

## Epoch 63/100

## 6/6 - 0s - loss: 44.0637 - mean_absolute_error: 5.0094 - val_loss: 15.9025 - val_mean_absolute_error: 3.3479 - 33ms/epoch - 6ms/step

## Epoch 64/100

## 6/6 - 0s - loss: 50.0619 - mean_absolute_error: 5.1017 - val_loss: 16.0546 - val_mean_absolute_error: 3.3891 - 33ms/epoch - 5ms/step

## Epoch 65/100

## 6/6 - 0s - loss: 54.1918 - mean_absolute_error: 5.3302 - val_loss: 15.3276 - val_mean_absolute_error: 3.2750 - 33ms/epoch - 6ms/step

## Epoch 66/100

## 6/6 - 0s - loss: 45.8594 - mean_absolute_error: 5.0630 - val_loss: 16.5450 - val_mean_absolute_error: 3.4201 - 29ms/epoch - 5ms/step

## Epoch 67/100

## 6/6 - 0s - loss: 37.5054 - mean_absolute_error: 4.6813 - val_loss: 15.5897 - val_mean_absolute_error: 3.2986 - 32ms/epoch - 5ms/step

## Epoch 68/100

## 6/6 - 0s - loss: 41.1114 - mean_absolute_error: 4.7638 - val_loss: 14.9583 - val_mean_absolute_error: 3.2099 - 39ms/epoch - 6ms/step

## Epoch 69/100

## 6/6 - 0s - loss: 42.1530 - mean_absolute_error: 4.7229 - val_loss: 14.8981 - val_mean_absolute_error: 3.2077 - 30ms/epoch - 5ms/step

## Epoch 70/100

## 6/6 - 0s - loss: 36.7822 - mean_absolute_error: 4.6359 - val_loss: 14.9601 - val_mean_absolute_error: 3.2092 - 30ms/epoch - 5ms/step

## Epoch 71/100

## 6/6 - 0s - loss: 42.6609 - mean_absolute_error: 4.7775 - val_loss: 15.8314 - val_mean_absolute_error: 3.3245 - 30ms/epoch - 5ms/step

## Epoch 72/100

## 6/6 - 0s - loss: 46.2637 - mean_absolute_error: 4.8777 - val_loss: 14.4350 - val_mean_absolute_error: 3.1350 - 46ms/epoch - 8ms/step

## Epoch 73/100

## 6/6 - 0s - loss: 42.7639 - mean_absolute_error: 4.9785 - val_loss: 14.7951 - val_mean_absolute_error: 3.1797 - 37ms/epoch - 6ms/step

## Epoch 74/100

## 6/6 - 0s - loss: 41.9135 - mean_absolute_error: 4.8354 - val_loss: 15.1695 - val_mean_absolute_error: 3.2331 - 34ms/epoch - 6ms/step

## Epoch 75/100

## 6/6 - 0s - loss: 44.9491 - mean_absolute_error: 4.9292 - val_loss: 14.3676 - val_mean_absolute_error: 3.1232 - 34ms/epoch - 6ms/step

## Epoch 76/100

## 6/6 - 0s - loss: 46.2250 - mean_absolute_error: 4.9832 - val_loss: 14.7970 - val_mean_absolute_error: 3.1938 - 30ms/epoch - 5ms/step

## Epoch 77/100

## 6/6 - 0s - loss: 41.5583 - mean_absolute_error: 4.7318 - val_loss: 13.7038 - val_mean_absolute_error: 2.9800 - 30ms/epoch - 5ms/step

## Epoch 78/100

## 6/6 - 0s - loss: 45.5637 - mean_absolute_error: 5.0121 - val_loss: 13.6442 - val_mean_absolute_error: 2.9538 - 31ms/epoch - 5ms/step

## Epoch 79/100

## 6/6 - 0s - loss: 42.1623 - mean_absolute_error: 4.8332 - val_loss: 13.5154 - val_mean_absolute_error: 2.9535 - 30ms/epoch - 5ms/step

## Epoch 80/100

## 6/6 - 0s - loss: 35.3987 - mean_absolute_error: 4.4426 - val_loss: 13.6741 - val_mean_absolute_error: 2.9704 - 29ms/epoch - 5ms/step

## Epoch 81/100

## 6/6 - 0s - loss: 42.9012 - mean_absolute_error: 4.8859 - val_loss: 13.9039 - val_mean_absolute_error: 3.0218 - 30ms/epoch - 5ms/step

## Epoch 82/100

## 6/6 - 0s - loss: 45.3977 - mean_absolute_error: 4.9875 - val_loss: 13.7371 - val_mean_absolute_error: 3.0010 - 30ms/epoch - 5ms/step

## Epoch 83/100

## 6/6 - 0s - loss: 40.1978 - mean_absolute_error: 4.5387 - val_loss: 13.5906 - val_mean_absolute_error: 2.9698 - 29ms/epoch - 5ms/step

## Epoch 84/100

## 6/6 - 0s - loss: 42.1860 - mean_absolute_error: 4.7813 - val_loss: 13.6459 - val_mean_absolute_error: 2.9477 - 27ms/epoch - 5ms/step

## Epoch 85/100

## 6/6 - 0s - loss: 38.7609 - mean_absolute_error: 4.6478 - val_loss: 13.6111 - val_mean_absolute_error: 2.9239 - 29ms/epoch - 5ms/step

## Epoch 86/100

## 6/6 - 0s - loss: 38.5731 - mean_absolute_error: 4.6000 - val_loss: 13.2965 - val_mean_absolute_error: 2.9083 - 29ms/epoch - 5ms/step

## Epoch 87/100

## 6/6 - 0s - loss: 42.2428 - mean_absolute_error: 4.6528 - val_loss: 13.2875 - val_mean_absolute_error: 2.9479 - 30ms/epoch - 5ms/step

## Epoch 88/100

## 6/6 - 0s - loss: 40.4984 - mean_absolute_error: 4.6650 - val_loss: 13.0986 - val_mean_absolute_error: 2.9105 - 38ms/epoch - 6ms/step

## Epoch 89/100

## 6/6 - 0s - loss: 42.3555 - mean_absolute_error: 4.7697 - val_loss: 13.3123 - val_mean_absolute_error: 2.9625 - 29ms/epoch - 5ms/step

## Epoch 90/100

## 6/6 - 0s - loss: 36.8049 - mean_absolute_error: 4.5736 - val_loss: 12.8433 - val_mean_absolute_error: 2.8263 - 27ms/epoch - 5ms/step

## Epoch 91/100

## 6/6 - 0s - loss: 42.8140 - mean_absolute_error: 4.6692 - val_loss: 12.9752 - val_mean_absolute_error: 2.9151 - 31ms/epoch - 5ms/step

## Epoch 92/100

## 6/6 - 0s - loss: 39.4167 - mean_absolute_error: 4.6356 - val_loss: 12.9761 - val_mean_absolute_error: 2.8851 - 31ms/epoch - 5ms/step

## Epoch 93/100

## 6/6 - 0s - loss: 37.2282 - mean_absolute_error: 4.6057 - val_loss: 12.8412 - val_mean_absolute_error: 2.8146 - 31ms/epoch - 5ms/step

## Epoch 94/100

## 6/6 - 0s - loss: 39.9037 - mean_absolute_error: 4.5760 - val_loss: 13.1590 - val_mean_absolute_error: 2.8801 - 32ms/epoch - 5ms/step

## Epoch 95/100

## 6/6 - 0s - loss: 38.1198 - mean_absolute_error: 4.6399 - val_loss: 13.3037 - val_mean_absolute_error: 2.8741 - 33ms/epoch - 6ms/step

## Epoch 96/100

## 6/6 - 0s - loss: 41.1695 - mean_absolute_error: 4.7062 - val_loss: 13.4858 - val_mean_absolute_error: 2.9030 - 43ms/epoch - 7ms/step

## Epoch 97/100

## 6/6 - 0s - loss: 37.2533 - mean_absolute_error: 4.3722 - val_loss: 12.9690 - val_mean_absolute_error: 2.8245 - 34ms/epoch - 6ms/step

## Epoch 98/100

## 6/6 - 0s - loss: 38.8862 - mean_absolute_error: 4.6539 - val_loss: 12.9727 - val_mean_absolute_error: 2.8281 - 35ms/epoch - 6ms/step

c(loss, mae) %<-% (model %>% evaluate(x_test, y_test, verbose = 0))

paste0("Mean absolute error on test set: ", sprintf("%.2f", mae))

## [1] "Mean absolute error on test set: 2.62"

3.2.8 Plot the training process

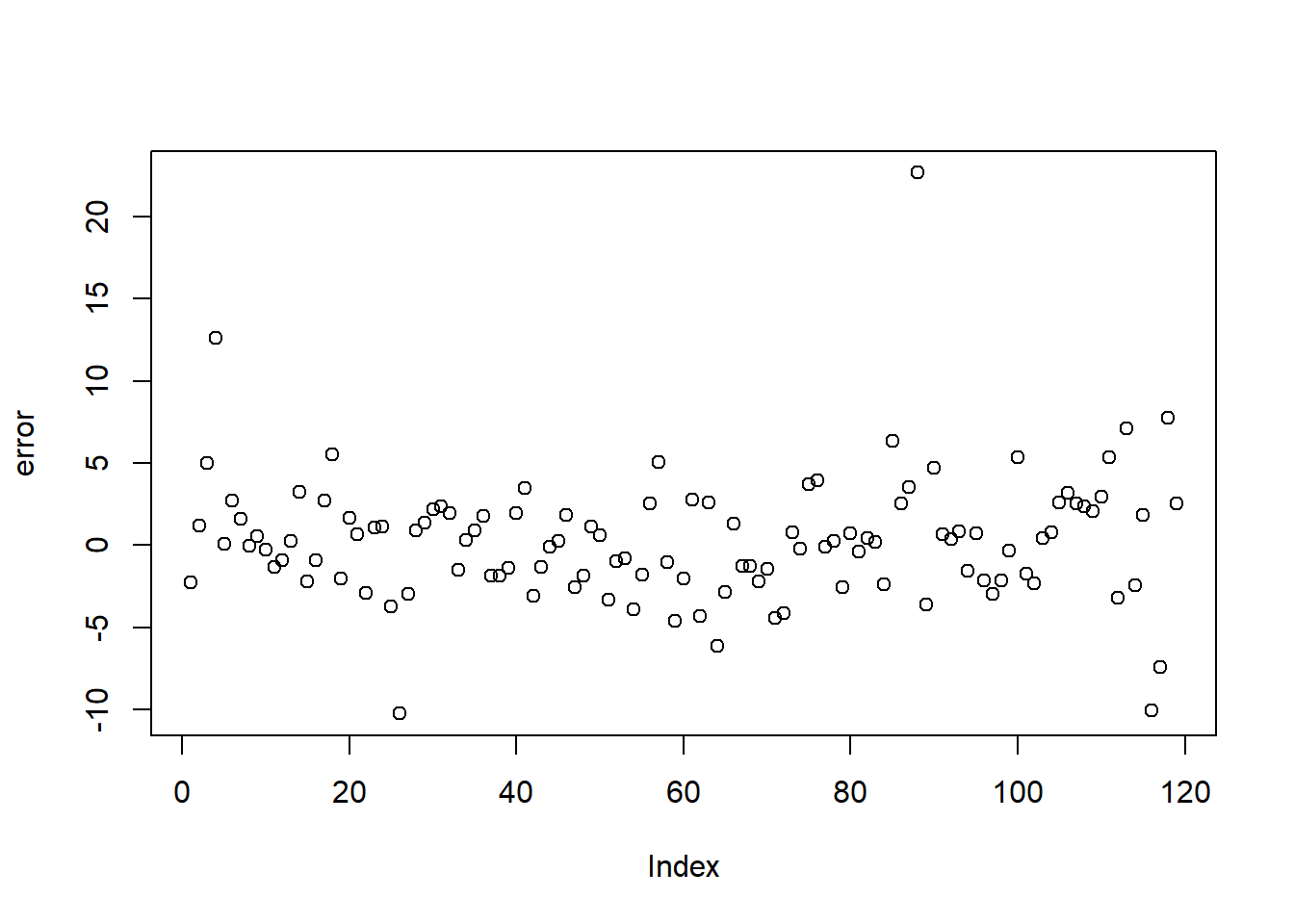

plot(history) ### Calculating the predicted values on test data

### Calculating the predicted values on test data

pred2 <- model %>%

predict(x_test) %>% k_get_value()

head(cbind(pred2, y_test))

##               y_test

## [1,] 23.87188   21.6

## [2,] 32.19261   33.4

## [3,] 31.18039   36.2

## [4,] 14.50413   27.1

## [5,] 14.90367   15.0

## [6,] 17.18917   19.9

- calculating the mean absolute error and root mean square error, and plotting the errors

error <- y_test-pred2

head(error)

##             [,1]

## [1,] -2.27187767

## [2,] 1.20738831

## [3,] 5.01961060

## [4,] 12.59587059

## [5,] 0.09632874

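## [6,] 2.71082726

# Caution: sqrt(mean(error)^2) equals abs(mean(error)), the absolute mean error, not the RMSE.

# The RMSE squares each error before averaging: sqrt(mean(error^2)).

rmse <- sqrt(mean(error)^2)

rmse

## [1] 0.3127118

plot(error)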
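The difference between the two error summaries can be illustrated with a minimal base R sketch. It uses only the six predictions and observed values printed above, so its numbers will differ from those for the full test set; the variable names (`pred`, `actual`, `err`, `abs_mean_err`) are chosen here for illustration.

```r
# First six predictions and observed values, copied from the printed output above
pred   <- c(23.87188, 32.19261, 31.18039, 14.50413, 14.90367, 17.18917)
actual <- c(21.6, 33.4, 36.2, 27.1, 15.0, 19.9)

err <- actual - pred

# Mean absolute error: average size of the errors, ignoring sign
mae <- mean(abs(err))

# Root mean square error: square first, then average, then take the root
rmse <- sqrt(mean(err^2))

# Squaring after averaging only removes the sign of the mean error
abs_mean_err <- sqrt(mean(err)^2)

c(mae = mae, rmse = rmse, abs_mean_err = abs_mean_err)
```

Because positive and negative errors cancel inside `mean(err)`, the last quantity is never larger than the MAE, which in turn is never larger than the RMSE.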

3.3 Convolutional neural network

3.3.2 Importing the data

mnist <- dataset_mnist()

- mnist is a list; it contains train$x, train$y, test$x, and test$y

class(mnist)

## [1] "list"

- the dim of mnist$train$x is 60000 28 28

# head(mnist)

3.3.3 Preparing the data

- randomly sampling 1000 cases for training and 100 for testing

set.seed(123)

index <- sample(nrow(mnist$train$x), 1000)

x_train <- mnist$train$x[index,,]

y_train <- (mnist$train$y[index])

index <- sample(nrow(mnist$test$x), 100)

x_test <- mnist$test$x[index,,]

y_test <- (mnist$test$y[index])

- dim of the four data sets

dim(x_train)

## [1] 1000   28   28

dim(y_train)

## [1] 1000

dim(x_test)

## [1] 100  28  28

dim(y_test)

## [1] 100

3.3.3.1 Generate tensors

- each image is 28 x 28 pixels; store these dimensions in variables

img_rows <- 28

img_cols <- 28

- using the array_reshape() function to reshape the image arrays into 4-D tensors with a trailing channel dimension
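What the reshape does to the data can be sketched in base R without Keras. This is a stand-in rather than the tutorial's method: it uses `array()` instead of `array_reshape()`, and the toy array `x3` is hypothetical. `array_reshape()` fills in row-major order by default while base R is column-major, but appending a trailing dimension of length 1 leaves every element in place under either ordering.

```r
# Toy stand-in for the image data: 5 "images" of 28 x 28 pixels (values are arbitrary)
x3 <- array(seq_len(5 * 28 * 28), dim = c(5, 28, 28))

# Append a channel dimension of length 1:
# (samples, rows, cols) -> (samples, rows, cols, channels)
x4 <- array(x3, dim = c(dim(x3), 1))

dim(x4)

# The pixel values are untouched; only the shape changed
all(x4[, , , 1] == x3)
```

The single channel reflects that the images are grayscale; a colour image would carry three channels in that last position.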

x_train <- array_reshape(x_train,

c(nrow(x_train),

img_rows,

img_cols, 1))

x_test <- array_reshape(x_test,

c(nrow(x_test),

img_rows,

img_cols, 1))

input_shape <- c(img_rows,

img_cols, 1)

- the training data is now a 4-D tensor

dim(x_train)

## [1] 1000   28   28    1

3.3.3.2 Normalization and one-hot encoding (dummy)

- the feature data are normalized to the range 0 to 1 by dividing by the maximum pixel value, 255

x_train <- x_train / 255

x_test <- x_test / 255

- convert the targets into one-hot-encoded (dummy) form using the to_categorical() function
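Both preparation steps can be illustrated in base R. This is a sketch under stated assumptions, not the internals of `to_categorical()`: the inputs `pixels` and `labels` are hypothetical toy values, and the encoding is built by indexing an identity matrix.

```r
# Hypothetical toy inputs: three pixel intensities and three digit labels
pixels <- c(0, 128, 255)
labels <- c(6, 0, 9)
num_classes <- 10

# Rescale pixel intensities from [0, 255] to [0, 1]
scaled <- pixels / 255

# One-hot encode: row i has a single 1 in column (label + 1),
# because the digit labels start at 0 while R indexes from 1
one_hot <- diag(num_classes)[labels + 1, ]

one_hot[1, ]
```

For the label 6 the single 1 falls in the seventh position, since position 1 is reserved for the digit 0.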

num_classes = 10

y_train <- to_categorical(y_train, num_classes)

y_test <- to_categorical(y_test, num_classes)

y_train[1,]

## [1] 0 0 0 0 0 0 1 0 0 0

3.3.4 Creating the model

model <- keras_model_sequential() %>%

layer_conv_2d(filters = 32,

kernel_size = c(3,3),

activation = 'relu',

input_shape = input_shape) %>%

layer_conv_2d(filters = 64,

kernel_size = c(3,3),

activation = 'relu') %>%

layer_max_pooling_2d(pool_size = c(2, 2)) %>%

layer_dropout(rate = 0.25) %>%

layer_flatten() %>%

layer_dense(units = 128,

activation = 'relu') %>%

layer_dropout(rate = 0.5) %>%

layer_dense(units = num_classes,

activation = 'softmax')

- summary of model
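The output shapes and parameter counts that the summary reports can be worked out by hand from the layer definitions above. The base R sketch below does the arithmetic, assuming the Keras defaults of stride 1 and "valid" (no) padding for the convolution layers; the helper `conv_out()` and the variable names are introduced here for illustration.

```r
# Output size of a "valid" convolution with stride 1: input - kernel + 1
conv_out <- function(n, k) n - k + 1

s1   <- conv_out(28, 3)   # after the first conv layer
s2   <- conv_out(s1, 3)   # after the second conv layer
s3   <- s2 %/% 2          # 2 x 2 max pooling halves each side
flat <- s3 * s3 * 64      # flatten: rows * cols * filters

# Conv parameters: (kernel height * kernel width * input channels + 1 bias) * filters
p_conv1 <- (3 * 3 * 1 + 1) * 32
p_conv2 <- (3 * 3 * 32 + 1) * 64

# Dense parameters: (inputs + 1 bias) * units
p_dense1 <- (flat + 1) * 128
p_dense2 <- (128 + 1) * 10

total <- p_conv1 + p_conv2 + p_dense1 + p_dense2

c(s1 = s1, s2 = s2, s3 = s3, flat = flat)
c(conv1 = p_conv1, conv2 = p_conv2, dense1 = p_dense1, dense2 = p_dense2, total = total)
```

Nearly all of the parameters sit in the first dense layer, because it connects every one of the flattened inputs to each of its 128 units. Pooling and dropout layers have no parameters.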

model %>% summary()

## Model: "sequential_2"

## ________________________________________________________________________________

## Layer (type) Output Shape Param #

## ================================================================================

## conv2d_1 (Conv2D) (None, 26, 26, 32) 320

## conv2d (Conv2D) (None, 24, 24, 64) 18496

## max_pooling2d (MaxPooling2D) (None, 12, 12, 64) 0

## dropout_4 (Dropout) (None, 12, 12, 64) 0

## flatten (Flatten) (None, 9216) 0

## dense_5 (Dense) (None, 128) 1179776

## dropout_3 (Dropout) (None, 128) 0

## dense_4 (Dense) (None, 10) 1290

## ================================================================================

## Total params: 1,199,882

## Trainable params: 1,199,882

## Non-trainable params: 0

## ________________________________________________________________________________

3.3.5 Training

batch_size <- 128

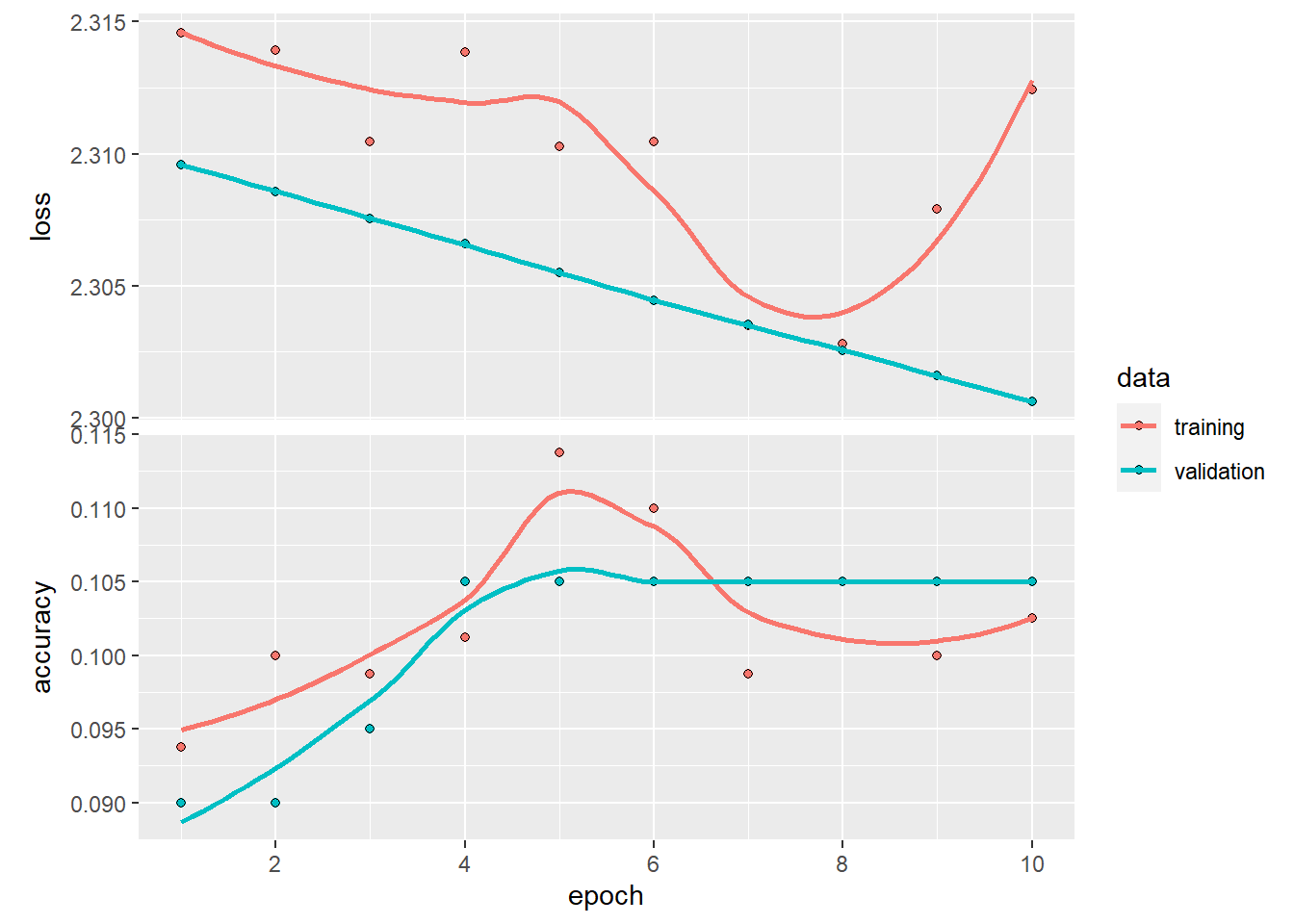

epochs <- 10

# Train model

history <- model %>% fit(

x_train, y_train,

batch_size = batch_size,

epochs = epochs,

validation_split = 0.2

)

## Epoch 1/10

##

## 1/7 [===>..........................] - ETA: 3s - loss: 2.3147 - accuracy: 0.0859

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3193 - accuracy: 0.0820

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3129 - accuracy: 0.0885

## 4/7 [================>.............] - ETA: 0s - loss: 2.3146 - accuracy: 0.0938

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3149 - accuracy: 0.0984

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3147 - accuracy: 0.0951

## 7/7 [==============================] - 2s 145ms/step - loss: 2.3146 - accuracy: 0.0938 - val_loss: 2.3096 - val_accuracy: 0.0900

## Epoch 2/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.2889 - accuracy: 0.1797

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3096 - accuracy: 0.1172

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3061 - accuracy: 0.1172

## 4/7 [================>.............] - ETA: 0s - loss: 2.3120 - accuracy: 0.1074

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3144 - accuracy: 0.1016

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3142 - accuracy: 0.1003

## 7/7 [==============================] - 1s 128ms/step - loss: 2.3139 - accuracy: 0.1000 - val_loss: 2.3086 - val_accuracy: 0.0900

## Epoch 3/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.3111 - accuracy: 0.0859

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3072 - accuracy: 0.1094

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3127 - accuracy: 0.0990

## 4/7 [================>.............] - ETA: 0s - loss: 2.3103 - accuracy: 0.1035

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3102 - accuracy: 0.1016

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3094 - accuracy: 0.1016

## 7/7 [==============================] - 1s 166ms/step - loss: 2.3105 - accuracy: 0.0988 - val_loss: 2.3075 - val_accuracy: 0.0950

## Epoch 4/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.2972 - accuracy: 0.1016

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3120 - accuracy: 0.0781

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3166 - accuracy: 0.0859

## 4/7 [================>.............] - ETA: 0s - loss: 2.3148 - accuracy: 0.0879

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3147 - accuracy: 0.1016

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3135 - accuracy: 0.1029

## 7/7 [==============================] - 1s 128ms/step - loss: 2.3138 - accuracy: 0.1013 - val_loss: 2.3066 - val_accuracy: 0.1050

## Epoch 5/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.3250 - accuracy: 0.0859

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3154 - accuracy: 0.1016

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3104 - accuracy: 0.1094

## 4/7 [================>.............] - ETA: 0s - loss: 2.3127 - accuracy: 0.1113

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3111 - accuracy: 0.1078

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3098 - accuracy: 0.1133

## 7/7 [==============================] - 1s 119ms/step - loss: 2.3103 - accuracy: 0.1138 - val_loss: 2.3055 - val_accuracy: 0.1050

## Epoch 6/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.3161 - accuracy: 0.0703

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3192 - accuracy: 0.0938

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3188 - accuracy: 0.0859

## 4/7 [================>.............] - ETA: 0s - loss: 2.3124 - accuracy: 0.1133

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3090 - accuracy: 0.1094

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3093 - accuracy: 0.1120

## 7/7 [==============================] - 1s 114ms/step - loss: 2.3105 - accuracy: 0.1100 - val_loss: 2.3044 - val_accuracy: 0.1050

## Epoch 7/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.2928 - accuracy: 0.1250

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3015 - accuracy: 0.0938

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3058 - accuracy: 0.0885

## 4/7 [================>.............] - ETA: 0s - loss: 2.3050 - accuracy: 0.0938

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3031 - accuracy: 0.1000

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3046 - accuracy: 0.0951

## 7/7 [==============================] - 1s 113ms/step - loss: 2.3035 - accuracy: 0.0988 - val_loss: 2.3035 - val_accuracy: 0.1050

## Epoch 8/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.3094 - accuracy: 0.0781

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3053 - accuracy: 0.0938

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3104 - accuracy: 0.0990

## 4/7 [================>.............] - ETA: 0s - loss: 2.3083 - accuracy: 0.0918

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3072 - accuracy: 0.0922

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3037 - accuracy: 0.1068

## 7/7 [==============================] - 1s 113ms/step - loss: 2.3028 - accuracy: 0.1050 - val_loss: 2.3026 - val_accuracy: 0.1050

## Epoch 9/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.2924 - accuracy: 0.1094

## 2/7 [=======>......................] - ETA: 0s - loss: 2.2931 - accuracy: 0.1172

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3012 - accuracy: 0.1172

## 4/7 [================>.............] - ETA: 0s - loss: 2.3016 - accuracy: 0.1094

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3045 - accuracy: 0.1031

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3075 - accuracy: 0.0990

## 7/7 [==============================] - 1s 109ms/step - loss: 2.3079 - accuracy: 0.1000 - val_loss: 2.3016 - val_accuracy: 0.1050

## Epoch 10/10

##

## 1/7 [===>..........................] - ETA: 0s - loss: 2.3022 - accuracy: 0.1094

## 2/7 [=======>......................] - ETA: 0s - loss: 2.3064 - accuracy: 0.1016

## 3/7 [===========>..................] - ETA: 0s - loss: 2.3089 - accuracy: 0.1016

## 4/7 [================>.............] - ETA: 0s - loss: 2.3101 - accuracy: 0.1055

## 5/7 [====================>.........] - ETA: 0s - loss: 2.3126 - accuracy: 0.1016

## 6/7 [========================>.....] - ETA: 0s - loss: 2.3127 - accuracy: 0.0990

## 7/7 [==============================] - 1s 107ms/step - loss: 2.3124 - accuracy: 0.1025 - val_loss: 2.3006 - val_accuracy: 0.1050

plot(history)
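Two numbers in the training log can be checked with a little arithmetic. The sketch below assumes the loss is categorical cross-entropy; the compile step is not shown in this section, so that is an assumption, as are the variable names.

```r
num_classes <- 10

# Cross-entropy of a model that assigns probability 1/10 to every class
chance_loss <- -log(1 / num_classes)

# Accuracy expected from guessing among 10 roughly balanced classes
chance_acc <- 1 / num_classes

# Steps per epoch: 1000 samples, 20% held out for validation, batches of 128
n_train <- 1000 * (1 - 0.2)
steps   <- ceiling(n_train / 128)

c(chance_loss = chance_loss, chance_acc = chance_acc, steps = steps)
```

The step count matches the `7/7` shown for each epoch. A loss that stays near `-log(1/10)` with accuracy near 0.10 suggests that, under this assumption, the network has barely moved from chance level after 10 epochs, so the optimizer settings or the number of epochs would be the first things to revisit.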